Student projects

Between 2008 and 2012, I supervised a total of eight final-year undergraduate projects and one Master’s project.

This page briefly describes each project’s aims and motivations.

Shader compositor

Joseph Seaton, BA 2012

Designing shaders can be laborious work, involving endless back and forth between tweaking the code (often minor visual tweaks) and examining the visual output. While programs exist to provide previews of individual shaders, modern software, especially computer game engines, often chains multiple shaders together, and little software exists to help shader writers test such pipelines. For beginners, learning how to use shaders is further complicated by the extra legwork needed to compose them.

Therefore, this project provides a simple interface to allow a user to easily create a pipeline of shaders, which are written in a slightly annotated version of GLSL. A GLSL parser extracts the attributes of shaders and their types, and JavaScript is used to programmatically connect attributes to create a pipeline of shaders.
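As a rough illustration of the idea, the sketch below extracts the declared interface of two GLSL shaders and pairs outputs with inputs of the same type. It is written in Python rather than the project's JavaScript, ignores the project's annotations (whose syntax is not described here), and the names `extract_interface` and `connect` are hypothetical. Matching purely by type is an assumption made for brevity; a real pipeline would typically render one stage to a texture and bind it to a sampler in the next.

```python
import re

# Matches simple GLSL global declarations such as "uniform sampler2D src;".
# Assumption: one declaration per statement, no arrays, layouts or annotations.
DECL = re.compile(r"\b(uniform|in|out)\s+(\w+)\s+(\w+)\s*;")

BLUR = """
uniform sampler2D src;
in vec2 uv;
out vec4 blurred;
void main() { blurred = texture(src, uv); }
"""

TONEMAP = """
in vec4 colour;
out vec4 result;
void main() { result = colour / (colour + vec4(1.0)); }
"""


def extract_interface(glsl_source):
    """Return a list of (qualifier, type, name) tuples declared in the shader."""
    return DECL.findall(glsl_source)


def connect(upstream, downstream):
    """Pair each 'out' of upstream with an unused same-typed 'in' of downstream."""
    outs = [(t, n) for q, t, n in extract_interface(upstream) if q == "out"]
    ins = [(t, n) for q, t, n in extract_interface(downstream) if q == "in"]
    links = []
    for out_type, out_name in outs:
        for candidate in ins:
            if candidate[0] == out_type:
                links.append((out_name, candidate[1]))
                ins.remove(candidate)  # each input is connected at most once
                break
    return links


print(connect(BLUR, TONEMAP))
```

Here the blur stage's `blurred` output is linked to the tone-mapping stage's `colour` input because both are declared as `vec4`.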

Converting anaglyph 3D to stereoscopic 3D

James Neve, BA 2011

Stereoscopic 3D films are becoming increasingly popular, and the film studios are keen to release films in 3D. However, the lack of suitable home cinema equipment leads to some films being released in anaglyph versions. While these work with every DVD player and TV, they require special red-cyan or green-magenta glasses, which result in strong viewing discomfort. This project presents approaches to convert anaglyph 3D videos to stereoscopic 3D videos with full-colour left and right views, by using motion estimation and stereo matching techniques respectively.
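To see what such a conversion has to recover, it helps to look at how a red-cyan anaglyph is formed: the red channel comes from the left view, and the green and blue channels from the right view. The toy sketch below (hypothetical function names, images as nested lists of RGB tuples) shows which channels each view retains. Filling in the missing channels is the hard part addressed by the project and is not shown.

```python
def make_red_cyan_anaglyph(left, right):
    """Combine two RGB views: red from the left eye, green and blue from the right."""
    return [[(l[0], r[1], r[2]) for l, r in zip(left_row, right_row)]
            for left_row, right_row in zip(left, right)]


def split_anaglyph(anaglyph):
    """Return the partial left and right views; lost channels are marked None."""
    left = [[(r, None, None) for r, g, b in row] for row in anaglyph]
    right = [[(None, g, b) for r, g, b in row] for row in anaglyph]
    return left, right
```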

Received a project dissertation prize.

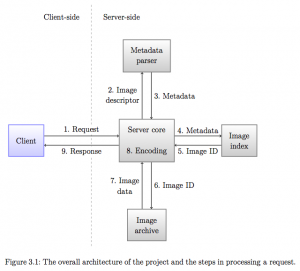

Streaming videos of solar imaging data

Ludwig Schmidt, BA 2011

This project designed and implemented a client-server streaming architecture for visual inspection of the large amounts of data captured by modern solar observatories such as NASA’s Solar Dynamics Observatory. Clients request a subset of the image database, and the server encodes the stream of images according to the H.264 standard on the fly in order to achieve a high compression ratio. The resulting bandwidth requirement is up to five times lower than a baseline of individually encoded JPEG images. Moreover, the server is able to encode a 1024 × 1024 pixel video stream in real time at about 30 frames per second.

GPU motion estimation for H.264

unfinished

This project implements motion estimation for H.264 in a stream processing language, so that it can run on a modern GPU. In most software H.264 encoders, the motion estimation is done entirely on the CPU, so shifting some of this load onto the GPU will improve the encoder: either by allowing it to run faster or do a better encode (or a trade-off between the two). The motion search algorithm needs to be chosen carefully to allow the massive parallelism that the GPU requires to run the code efficiently.
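The reason motion estimation can suit a GPU is visible in the simplest search strategy, exhaustive block matching: every candidate displacement is scored independently, so all candidates could be evaluated in parallel. The sketch below is a plain-Python illustration using the sum of absolute differences (SAD) on greyscale frames. It is not the algorithm chosen by the project, and it leaves out what a real H.264 encoder adds, such as variable block sizes, sub-pixel refinement and rate-aware costs.

```python
def sad(cur, ref, bx, by, dx, dy, size):
    """Sum of absolute differences between a block in cur and a shifted block in ref."""
    total = 0
    for y in range(size):
        for x in range(size):
            total += abs(cur[by + y][bx + x] - ref[by + dy + y][bx + dx + x])
    return total


def full_search(cur, ref, bx, by, size, radius):
    """Return (cost, (dx, dy)) of the best match within +/- radius pixels."""
    height, width = len(ref), len(ref[0])
    best = None
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            # Skip candidates whose block would fall outside the reference frame.
            if not (0 <= bx + dx and bx + dx + size <= width
                    and 0 <= by + dy and by + dy + size <= height):
                continue
            cost = sad(cur, ref, bx, by, dx, dy, size)  # independent per candidate
            if best is None or cost < best[0]:
                best = (cost, (dx, dy))
    return best


# Synthetic 8x8 frames: cur shows the content of ref moved by 2 pixels in x and 1 in y.
ref = [[7 * x + 13 * y for x in range(8)] for y in range(8)]
cur = [[ref[min(y + 1, 7)][min(x + 2, 7)] for x in range(8)] for y in range(8)]
print(full_search(cur, ref, bx=2, by=2, size=2, radius=2))
```

The two nested loops over `dx` and `dy` are the part that would map to parallel GPU threads, with one candidate (or one pixel of one candidate) per thread.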

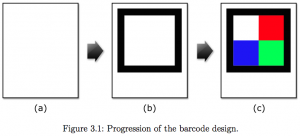

Lecture voting system

Mark Wheeler, BA 2010

Many lectures and seminars need to conduct a vote to gather information from the audience. Lecture theatres often have camera equipment installed, but votes are usually conducted using either electronic buttons or simple vote counting. This project uses computer vision techniques to detect votes with the existing camera equipment, removing the need for extra electronic equipment. Each user has a unique barcode, which is detected and counted as a vote when they hold it towards the camera. The real-time implementation allows users to vote interactively, and also allows them to bid and play multiplayer games in real time.

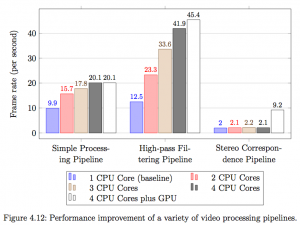

A GPU-enabled real-time video processing library

Rubin Xu, BA 2010

Computer graphics and computer vision often deal with image processing tasks. Although the details of these tasks vary, many of them follow a general architecture – an image/video processing pipeline. It is desirable to have an extensible framework which can assist the user in creating and using such pipelines. Moreover, the framework needs to keep video pipelines running at their designated frame rate, which could potentially require a lot of computational power. Therefore, it is desirable to utilise all possible computational power to boost performance in order to achieve real-time processing. In a modern PC, there are often two major “computing engines” available, namely a multi-core processor and a programmable GPU.

Received a project dissertation prize.

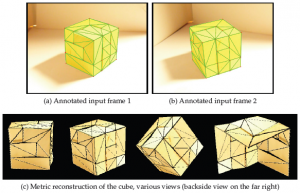

Interactively guided structure-from-motion

Malte Schwarzkopf, BA 2009

User-created 3D content has become increasingly ubiquitous in virtual worlds such as Google Earth or SecondLife. Nonetheless, the process of building a 3D model of a real-world object generally still involves tedious manual labour.

This project presents a novel approach that unifies automatic structure-from-motion and interactive user input, making use of the human perception of an object to improve results. At the same time, an intuitive front-end allows a user to merely trace the outlines of an object in order to generate a 3D model of it.

Received a project dissertation prize.

Published as a poster at the Vision, Modelling and Visualisation Workshop 2009 in Braunschweig, Germany.

Automatic people removal from photographs

Lech Świrski, BA 2009

A common problem when taking photographs of public locations is that the view of at least some part of the scene is invariably blocked by a pedestrian. However, under the assumption that pedestrians move whereas stationary objects do not, with sufficient photos of the target one should have enough data to construct an unobstructed image of the target. Problems arise, however, if the photos are taken from slightly different vantage points or with different settings (which is indeed likely for most cameras when set to auto).

This project resolves these problems in an automated manner. It aligns the input images if necessary, selects the pedestrians and then replaces them with the corresponding area from another photo, all in such a way that the resulting image looks authentic.
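The underlying assumption, that pedestrians move while the background stays put, can be illustrated with a much simpler stand-in technique: a per-pixel median over photos that are already aligned and equally exposed. This is not the project's select-and-replace method, and the function name is hypothetical. It only shows why enough photos of the same scene contain the information needed for an unobstructed image.

```python
from statistics import median


def remove_transients(photos):
    """Per-pixel median over equally sized, pre-aligned greyscale photos."""
    height, width = len(photos[0]), len(photos[0][0])
    return [[median(photo[y][x] for photo in photos) for x in range(width)]
            for y in range(height)]
```

As long as each pixel shows the background in more than half of the photos, the median discards the occasional pedestrian at that pixel.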

Received a project dissertation prize.

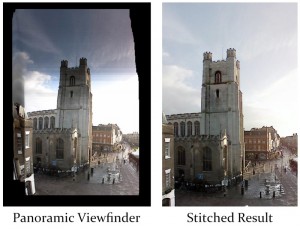

Panorama viewfinding

Aloysius Han, BA 2009

The field of view of today’s cameras is fairly limited, and panoramas are one way of cheaply extending the field of view of digital photos. A panoramic image corresponds more closely to the human field of view and hence offers a better correspondence between the real world and the image. Because its field of view is similar to that of human vision, a panorama also makes for a more immersive image.

Rather than manually capturing a panorama and leaving the completeness of a panorama to chance, this project develops a “panorama viewfinder”. The viewfinder displays a live preview of the panorama, and extracts key photos automatically if they cover areas that have not been captured yet. This allows the photographer to sweep the camera back and forth over the scene to easily capture a complete panorama.
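The key-photo selection can be sketched as bookkeeping over a coarse grid of the panorama: a frame is kept only if it covers cells that no earlier key photo has covered. The sketch below assumes each frame's footprint is already known as an axis-aligned rectangle in panorama grid coordinates, which in practice requires registering the live frame against the panorama. The class name, method names and the `min_new_cells` threshold are all hypothetical.

```python
class CoverageMap:
    """Tracks which cells of a coarse panorama grid have been photographed."""

    def __init__(self, columns, rows):
        self.columns, self.rows = columns, rows
        self.covered = set()

    def offer(self, left, top, width, height, min_new_cells=1):
        """Keep the frame as a key photo if it adds enough uncovered cells."""
        footprint = {(x, y)
                     for x in range(max(left, 0), min(left + width, self.columns))
                     for y in range(max(top, 0), min(top + height, self.rows))}
        new_cells = footprint - self.covered
        if len(new_cells) < min_new_cells:
            return False  # nothing new in view, so discard the frame
        self.covered |= new_cells
        return True

    def complete(self):
        """True once every cell of the panorama grid has been covered."""
        return len(self.covered) == self.columns * self.rows
```

Sweeping the camera back and forth then amounts to calling `offer` for every live frame until `complete()` returns true.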