Dr. Christian Richardt

Christian Richardt is a Research Scientist at Reality Labs Research in Pittsburgh. He was previously a Reader (equivalent to Associate Professor) and EPSRC-UKRI Innovation Fellow in the Visual Computing Group, the CAMERA Centre and REVEAL at the University of Bath. His research interests span image processing, computer graphics and computer vision. His work combines insights from vision, graphics and perception to reconstruct visual information from images and videos and to create high-quality visual experiences, with a focus on 6-degree-of-freedom VR video.

Christian was previously a postdoctoral researcher working on user-centric video processing and motion capture with Christian Theobalt, at the Intel Visual Computing Institute at Saarland University and in the Graphics, Vision and Video group at the Max-Planck-Institut für Informatik in Saarbrücken, Germany. Before that, he was a postdoc in the REVES team at Inria Sophia Antipolis, France, working with George Drettakis and Adrien Bousseau. He also interned with Alexander Sorkine-Hornung at Disney Research Zurich, where he worked on Megastereo panoramas.

Christian graduated with a PhD and BA from the University of Cambridge in 2012 and 2007, respectively. His PhD in the Computer Laboratory’s Rainbow Group was supervised by Neil Dodgson. His doctoral research investigated the full life cycle of videos with depth (RGBZ videos): from their acquisition, via filtering and processing, to the evaluation of stereoscopic display.

News

- November 2023

- Three papers accepted:

1. Neural Feature Filtering for Faster Structure-from-Motion Localisation (BMVC 2023 in Aberdeen),

2. PyNeRF: Pyramidal Neural Radiance Fields (NeurIPS 2023 in New Orleans), and

3. VR-NeRF: High-Fidelity Virtualized Walkable Spaces (SIGGRAPH Asia 2023 in Sydney). Check out our new Eyeful Tower dataset, the highest-resolution, highest-quality, high-dynamic-range, multi-view indoor scene dataset powering VR-NeRF.

- October 2023

- We presented one paper on Neural Fields for Structured Lighting at ICCV 2023 in Paris.

- June 2023

- We held DynaVis, the Fourth International Workshop on Dynamic Scene Reconstruction, at CVPR 2023 in Vancouver (afternoon of Monday 19 June).

- We presented two papers at CVPR 2023 in Vancouver: HyperReel and Neural Duplex Radiance Fields, for high-fidelity and real-time view synthesis, respectively.

- March 2023

- I will be serving as an Area Chair for ICCV 2023.

- June 2022

- We presented our work on high-resolution 360° monocular depth estimation (360MonoDepth) at CVPR 2022 in New Orleans.

- April 2022

- I’m excited to join Reality Labs Research in Pittsburgh as a Research Scientist Lead.

- March 2022

- Our work on high-resolution 360° monocular depth estimation (360MonoDepth) will appear at CVPR 2022.

- December 2021

- We are presenting our work on time-of-flight NeRF for dynamic scenes at NeurIPS 2021.

- I co-chaired the CVMP 2021 conference in London, the first ACM SIGGRAPH-affiliated conference to be held in person again.

- October 2021

- Our work on time-of-flight NeRF for dynamic scenes will appear at NeurIPS 2021.

- We have a paper on 360° optical flow accepted at BMVC 2021.

- I am delighted that 3DV 2021 will be free to attend thanks to our generous sponsors!

Selected publications

Linning Xu, Vasu Agrawal, William Laney, Tony Garcia, Aayush Bansal, Changil Kim, Samuel Rota Bulò, Lorenzo Porzi, Peter Kontschieder, Aljaž Božič, Dahua Lin, Michael Zollhöfer and Christian Richardt

SIGGRAPH Asia 2023

Benjamin Attal, Jia-Bin Huang, Christian Richardt, Michael Zollhöfer, Johannes Kopf, Matthew O’Toole and Changil Kim

Conference on Computer Vision and Pattern Recognition (CVPR) 2023 (highlight, 2.6% acceptance rate)

Ziyu Wan, Christian Richardt, Aljaž Božič, Chao Li, Vijay Rengarajan, Seonghyeon Nam, Xiaoyu Xiang, Tuotuo Li, Bo Zhu, Rakesh Ranjan and Jing Liao

Conference on Computer Vision and Pattern Recognition (CVPR) 2023

Hyeonjoong Jang, Andréas Meuleman, Dahyun Kang, Donggun Kim, Christian Richardt and Min H. Kim

ACM Transactions on Graphics (Proceedings of SIGGRAPH 2022)

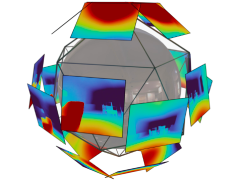

Manuel Rey-Area*, Mingze Yuan* and Christian Richardt

Conference on Computer Vision and Pattern Recognition (CVPR) 2022

Benjamin Attal, Eliot Laidlaw, Aaron Gokaslan, Changil Kim, Christian Richardt, James Tompkin and Matthew O’Toole

Advances in Neural Information Processing Systems (NeurIPS) 2021

Tobias Bertel, Mingze Yuan, Reuben Lindroos and Christian Richardt

ACM Transactions on Graphics (Proceedings of SIGGRAPH Asia 2020)

Thu Nguyen-Phuoc, Christian Richardt, Long Mai, Yong-Liang Yang and Niloy Mitra

Advances in Neural Information Processing Systems (NeurIPS) 2020

Christian Richardt, James Tompkin and Gordon Wetzstein

Book chapter in Real VR – Immersive Digital Reality, Springer 2020

Thu Nguyen-Phuoc, Chuan Li, Lucas Theis, Christian Richardt and Yong-Liang Yang

International Conference on Computer Vision (ICCV) 2019

Christian Richardt, Peter Hedman, Ryan S. Overbeck, Brian Cabral, Robert Konrad and Steve Sullivan

Course at SIGGRAPH 2019

George Koulieris, Kaan Akşit, Michael Stengel, Rafał K. Mantiuk, Katerina Mania and Christian Richardt

Computer Graphics Forum (Eurographics 2019 State-of-the-Art Report)

Hyeongwoo Kim, Pablo Garrido, Ayush Tewari, Weipeng Xu, Justus Thies, Matthias Nießner, Patrick Pérez, Christian Richardt, Michael Zollhöfer and Christian Theobalt

ACM Transactions on Graphics (Proceedings of SIGGRAPH 2018)

Christian Richardt, Yael Pritch, Henning Zimmer and Alexander Sorkine-Hornung

Conference on Computer Vision and Pattern Recognition (CVPR) 2013 (oral presentation, 3.3% acceptance rate)